PostgreSQL Foreign Data Wrapper for JSON data from Snowplow API

This is not the first time I find myself wondering about the relationship between quite an overwhelming amount of available data and the amount of effort it takes to find a good “ready to go” data set. Why is it so hard? The data is there, but it never seems to fit my needs. The typical data analysis workflow bounces back and forth in between trying to apply a method to a data set coming from a source, noticing some weird behaviour, tracing it down to the data and trying to fix the problem just to bounce back onto a different, but very similar, problem. In short, it takes time to ensure that you have managed to get the data from the source to your analysis environment in the right form. In this blog, we are going to present a case study on how to build an interface which automatically takes care of all the conversions needed to make the data behave well!

Introduction

At the beginning we have an API which gathers GPS data information about the movements of the snowplow units in the city environment. The API is developed to gather just the most essential information: the timestamp, the location and the type of the operation the snowplow unit is currently operating on. The API gathers this information from all active snowplow units once in every five seconds so there is a lot of data. However, there are a few major issues which hinder the utilization of this data:

- There is a very limited selection of things you can query from the url based API

- The data is in JSON format

One of the biggest downfalls related to the first issue was that if a user wanted to know history information about snowplow units’ movements, one would have to specify the machine ID. It was not possible to query for the data rows which had been gathered during the latest five minute period. The only way to figure out this was to search for a specific number of latest records for every machine ID and then look at the timestamp of each record to see when the particular GPS information was gathered. As you can imagine, this is not an applicable way of doing things when there are almost one hundred snowplow units. Yet, that kind of information is very useful when you think of monitoring the process of plowing the snow off the streets.

In general, the second issue would not necessarily be a problem. However, in this case it is. The main reason for this is that the main point in the whole API is to gather information about snowplow’s whereabouts. It requires quite a wizard to recognize the name of the street the snowplow unit is moving along straight from the coordinate information. And wouldn’t it be nicer to see the location information on the map base? This is of course possible but the JSON data inside of the API is not the easiest data source for any Geographic Information System.

The need for utilizing GIS software is not just the fact that it adds a huge amount of value to the gathered location data but it also opens up possibilities for various kinds of spatial data analysis and data enrichment processes which can help to optimize the plowing operation in many ways. However, if we really want to make diverse and efficient use of geographic information, we should turn onto spatial databases. In addition to that, databases natively allow multiple users to exploit the data at the same time.

For the above mentioned reasons, our solution was based on the aim to transfer the data from the API to the PostGIS database. As a technical solution we utilized PostgreSQL (a free and open source relational database management system emphasizing extensibility and SQL compliance) and its spatial extension called PostGIS. Onto that, we tailored PostgreSQL’s Foreign Data Wrapper solution with the help of multicorn. With the help of another extension, pg_cron, we make it possible to run periodic jobs in PostgreSQL. As a result, we derived an interface which, at a user-defined frequency, goes to fetch the new data rows from API and inserts them into the PostGIS database. The PostGIS database is easy to access from any GIS software (like QGIS) and utilizing it does not require programming skills.

Development

The interface solution has been created with docker-compose. Docker is a tool which is planned for smoother creation, development and execution of various applications. Docker makes it possible to store the application and all its dependencies (packages, libraries etc.) into the same container. In addition to the fact that docker makes it easier to start using the application by storing all its pieces to the same parcel, docker also guarantees that the application will work in every Linux computer exactly the same way.

Once you have installed docker and docker-compose, install also PostgreSQL and PostGIS. Rest of the required installations include e.g. multicorn and plpygis (for Foreign Data Wrapper support for PostGIS). After the required dependencies have been installed, you still have to connect to your database and enable multicorn and pg_cron extensions. The complete documentation of the Snowplow FDW can be found at Github. Alternatively, you can just fork the Github repository and start using the application.

Logic of the interface

The logic of the actual interface is relatively simple. The API url is used as an option which gets delivered as a key when calling the Snowplow FDW application. From this key, the code searches for substrings which determine what kind of information we wish to fetch from the API. There are three alternatives: either we are fetching a metadata table, latest location information about the units or history information of a snowplow unit. By metadata table we refer to some kind of static information stored in the API like the table about possible machine types. The second alternative is related to a kind of “summary page” of the API which contains the table of each machine ID, its machine type and its latest location information. In both of these cases the code fetches the data from the given url address associated with the certain JSON column name and adds it to a variable which multicorn then utilizes in the creation process of the desired kind of PostGIS table.

With the third alternative, the procedure is a bit more complicated. Since our goal was to transform data into as practical form as possible, we do not want to fetch the history information of each snowplow unit into a distinct table but rather to create one massive data table with all the history information. Naturally, when doing this, we need to add one extra column to the table which specifies the snowplow unit ID a data row is related to.

Creating the FDW server

The Snowplow Foreign Data Wrapper server interface application can be created by executing one SQL statement:

CREATE SERVER dev_fdw FOREIGN DATA WRAPPER multicorn OPTIONS (wrapper 'snowplowfdw.SnowplowForeignDataWrapper');Creating metadata tables

If we wish to use Snowplow FDW for creating a metadata table about machine types we need to execute the following SQL statement:

CREATE FOREIGN TABLE types_temp( id integer, name varchar ) server dev_fdw options ( url '' );

After that we need to create a PostGIS table for storing the unit information fetched from the Snowplow API:

CREATE TABLE types( id integer, name varchar );

Finally we can insert the data from the foreign table to the types table:

INSERT INTO types( id, name)

SELECT id, name FROM types_temp;

Creating a scheduled task for updating history data table

The task of creating a scheduled task responsible for updating history information of the units can be split into two phases. In the first phase we have a scheduled task which updates the contents of a table storing “summary page” information. This allows us to find out which units have been active by comparing the latest timestamp of each unit to the moment when we previously run history data gathering scripts. If the latest timestamp has happened after the previous data gathering process, we update the latest location information of that snowplow unit in our “summary page” information table.

In the second phase of the scheduled task for updating history data table we utilize the up to date list of the active units. For every active snowplow unit, we construct the url for its history information and fetch a certain number of rows from there. The number of fetched rows depends on the frequency of the scheduled tasks. For example, if you want to gather history data once in every hour and we know that the API collects data at most in every five seconds, the theoretical upper limit for the amount of new data rows is 60×60/5=720. However, fetching a couple more extra rows does not hurt since the application checks for possible duplicate rows before adding them to the history data table.

Since both of these phases contain a bunch of logic in addition to just fetching the data from url, function scripts have been developed to take care of these tasks. The user can control the process via PgAdmin (or other PostgreSQL management and development platform). There a user can create the functions for updating both the “summary page” information table and the history data table. The functions are coded with plsql language (the scripts can be found in their entities from Github documentation) and inserted under cron schema. After that, the user needs to create the empty PostGIS tables for both “summary page” information and history table. The SQL statements needed for that are very similar to the ones needed when creating a metadata table and they can again be found in Github.

The scheduled tasks themselves are created, monitored and terminated with SQL statements like

— Execute the function one minute past every hour

SELECT cron.schedule('1 */1 * * *', $$select cron.updatefunction1()$$);

— Execute the function five minutes past every hour

SELECT cron.schedule('5 */1 * * *', $$select cron.updatefunction2()$$);

SELECT * FROM cron.job_run_details WHERE jobid=; SELECT cron.unschedule( );

Utilizing gathered data

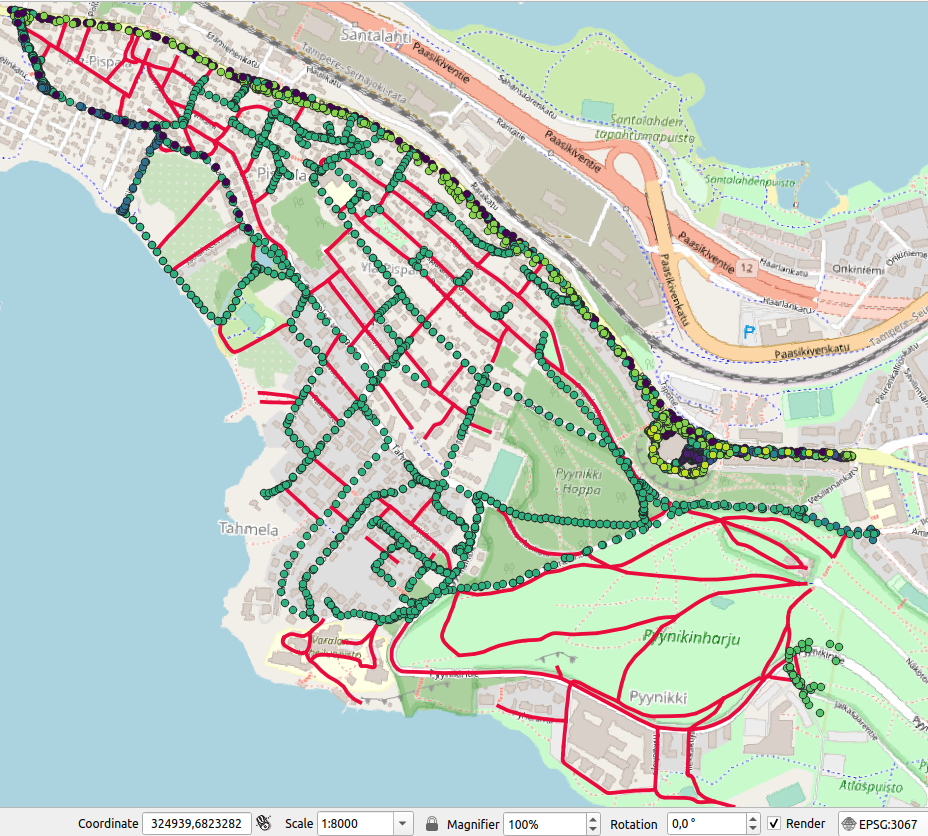

Now when we have created the FDW application which automatically fetches all the data gathered in the Snowplow API into PostGIS tables once an hour (or any other way; there are detailed instructions in Github on how to modify the frequency and start times of the periodically executed tasks), we can start to play with the data. Below you can see some examples which are produced with QGIS (free and open source GIS software).