Deriving insights from point cloud data with WhiteboxTools and QGIS

Point cloud data is expensive to acquire, and even once you have it, you still need to analyze it. Lidar data is too often left aside because it is hard to analyze and usually requires quite a bit of processing power. Luckily, geospatial technologies are getting better. In this blog post, I’ll take a quick dive into a couple of use cases for deriving insights from point cloud data with open-source software.

As our dataset of interest, we’ll use Lidar data from the National Land Survey of Finland (NLS Finland). Just last year, NLS Finland published new high-density Lidar data (5 points per m²), which I use here for the analysis (you can download some of it as well).
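A quick back-of-the-envelope calculation shows why these datasets get large so fast: the number of points is roughly the nominal density times the covered area. The helpers below are a minimal sketch of that arithmetic; the function names are hypothetical, and they assume a uniform density, which real surveys only approximate. At 5 points per m², a single square kilometre already holds about 5 million points, so a dataset of more than 10 million points at that density would cover on the order of 2 km².

```python
def estimated_point_count(density_per_m2: float, area_km2: float) -> int:
    """Estimate the number of points: density (points/m²) x area (m²)."""
    area_m2 = area_km2 * 1_000_000  # 1 km² = 1,000,000 m²
    return round(density_per_m2 * area_m2)


def implied_area_km2(point_count: int, density_per_m2: float) -> float:
    """Invert the relation: area (km²) that a given point count covers."""
    return point_count / density_per_m2 / 1_000_000


if __name__ == "__main__":
    # Nominal densities mentioned in this post, over a hypothetical 1 km² area
    for density in (5, 30):
        print(f"{density} pts/m² -> {estimated_point_count(density, 1.0):,} points per km²")
    print(f"10 million points at 5 pts/m² -> ~{implied_area_km2(10_000_000, 5):.1f} km²")
```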

Getting started with some postprocessing

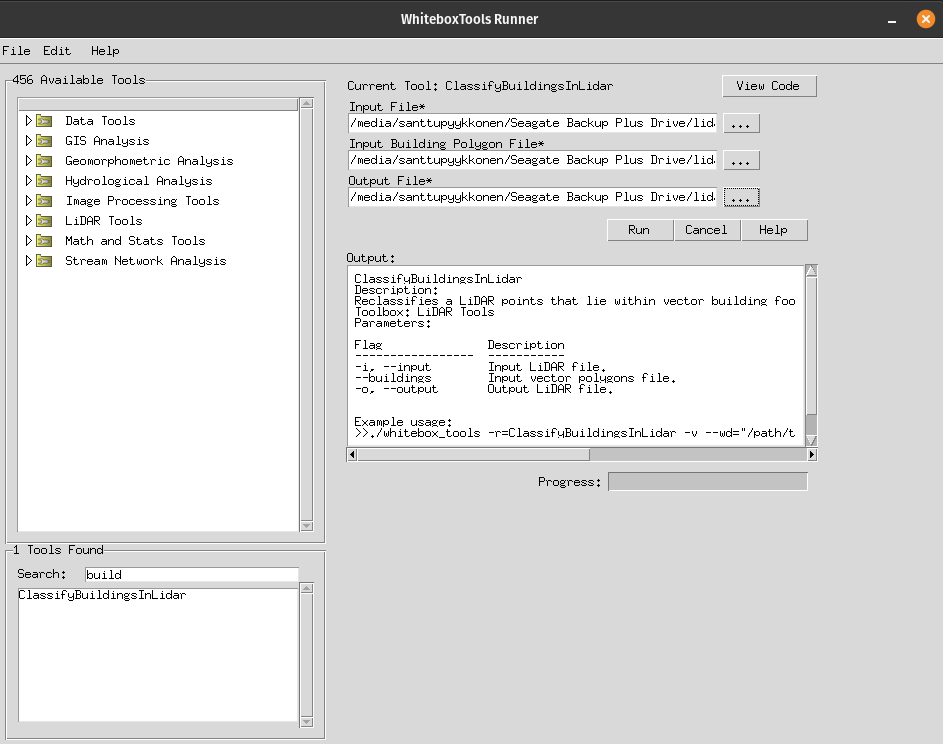

For starters, you can drag and drop the point cloud data into QGIS. While it’s loading, you might want to visit the WhiteboxTools website at https://www.whiteboxgeo.com/. WhiteboxTools (WBT) is the set of tools we’ll use to process the point cloud data. For this blog post, I used WhiteboxTools Runner, a small and simple GUI for WBT:

You can download WBT and read its extensive user manual on the WhiteboxTools website. You can also install a QGIS plugin (by Alexander Bruy) that lets you use the tools from within QGIS.

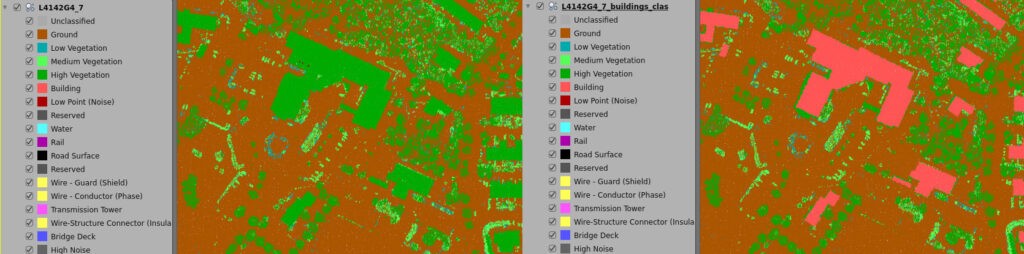

First, we want to classify the buildings, since the original classification did not include buildings as a class. This is done with WBT’s ClassifyBuildingsInLidar tool, which ran very fast (~10 s) considering that the point cloud dataset has more than 10 million points.
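Conceptually, this step is a point-in-polygon test: the WBT manual describes ClassifyBuildingsInLidar as assigning the ASPRS building class (6) to points that fall inside the footprint polygons. The sketch below illustrates that idea in plain Python with an even-odd ray-casting test. It is not WBT’s implementation: it assumes points are already loaded as (x, y, z, class) tuples, ignores polygon holes, and the function names are hypothetical. A production tool would also index the footprints spatially instead of testing every point against every polygon.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float, float, int]  # (x, y, z, ASPRS class code)
Ring = Sequence[Tuple[float, float]]     # footprint outline as (x, y) vertices

BUILDING_CLASS = 6  # ASPRS LAS classification code for "Building"


def point_in_polygon(x: float, y: float, ring: Ring) -> bool:
    """Even-odd rule: count how many edges a ray towards +x crosses."""
    inside = False
    j = len(ring) - 1
    for i in range(len(ring)):
        xi, yi = ring[i]
        xj, yj = ring[j]
        # Only edges that straddle the horizontal line through y can be crossed
        if (yi > y) != (yj > y):
            x_cross = xi + (y - yi) * (xj - xi) / (yj - yi)
            if x < x_cross:
                inside = not inside
        j = i
    return inside


def classify_buildings(points: List[Point], footprints: List[Ring]) -> List[Point]:
    """Return a copy of the points with those inside any footprint set to class 6."""
    result = []
    for x, y, z, cls in points:
        if any(point_in_polygon(x, y, fp) for fp in footprints):
            cls = BUILDING_CLASS
        result.append((x, y, z, cls))
    return result


if __name__ == "__main__":
    square = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0), (0.0, 10.0)]
    pts = [(5.0, 5.0, 12.0, 1), (15.0, 5.0, 3.0, 2)]
    print(classify_buildings(pts, [square]))
```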

The image on the right shows the result of classifying the buildings in the point cloud. The tool requires building footprints as polygon vector data, which I fetched from NLS Finland’s OGC API Features service.
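Fetching the footprints can be scripted too. OGC API Features (Part 1: Core) serves features at `/collections/{collectionId}/items` and accepts `bbox` and `limit` query parameters. The sketch below only builds such a request URL; the base URL and the `buildings` collection id are placeholders, not NLS Finland’s actual endpoint, so look up the real values in the service’s `/collections` listing.

```python
from urllib.parse import urlencode


def items_url(base_url: str, collection_id: str,
              bbox: tuple, limit: int = 1000) -> str:
    """Build an OGC API Features 'items' request URL.

    bbox is (min_lon, min_lat, max_lon, max_lat); Part 1 of the standard
    interprets it in WGS 84 (CRS84) coordinates.
    """
    query = urlencode({"bbox": ",".join(str(v) for v in bbox), "limit": limit})
    return f"{base_url.rstrip('/')}/collections/{collection_id}/items?{query}"


if __name__ == "__main__":
    # Placeholder endpoint and collection id; an arbitrary example bbox
    print(items_url("https://example.com/ogcapi", "buildings",
                    (22.20, 60.40, 22.30, 60.50), limit=100))
```

Responses are paged, so a complete download follows the `next` link in each response until none is returned.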

Detour on rooftop analysis with point cloud data

Since we were already analyzing the buildings, we could also analyze the rooftops, their slopes and aspects, e.g. to identify where solar panels could be placed.

As a result, we get a vector layer of rooftops that describes not only each roof as a whole but also the individual sections of each roof. Its attribute table holds the slope, aspect, and other relevant numeric information.
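WBT does the hard part, splitting each roof into sections. Once a section’s points are known, slope and aspect follow directly from a fitted plane. The sketch below fits z = a·x + b·y + c by least squares and converts the gradient into slope (degrees from horizontal) and aspect (the compass direction the face points downhill, in degrees clockwise from north). It assumes a projected CRS with x pointing east and y pointing north; the function names are hypothetical, and this is an illustration of the geometry, not WBT’s code.

```python
import math
from typing import Iterable, Optional, Tuple


def fit_roof_plane(points: Iterable[Tuple[float, float, float]]) -> Tuple[float, float]:
    """Least-squares plane z = a*x + b*y + c; returns the gradient (a, b)."""
    pts = list(points)
    n = len(pts)
    if n < 3:
        raise ValueError("need at least 3 points to fit a plane")
    # Centre the coordinates so large map coordinates don't hurt precision
    mx = sum(p[0] for p in pts) / n
    my = sum(p[1] for p in pts) / n
    mz = sum(p[2] for p in pts) / n
    sxx = sxy = syy = sxz = syz = 0.0
    for x, y, z in pts:
        dx, dy, dz = x - mx, y - my, z - mz
        sxx += dx * dx
        sxy += dx * dy
        syy += dy * dy
        sxz += dx * dz
        syz += dy * dz
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("points are collinear in x/y; plane is undefined")
    # Solve the 2x2 normal equations with Cramer's rule
    a = (sxz * syy - sxy * syz) / det
    b = (sxx * syz - sxy * sxz) / det
    return a, b


def slope_aspect(a: float, b: float) -> Tuple[float, Optional[float]]:
    """Slope in degrees; aspect in degrees clockwise from north (None if flat)."""
    grad = math.hypot(a, b)
    slope = math.degrees(math.atan(grad))
    if grad < 1e-9:
        return slope, None
    # The face points downhill, i.e. along (-a, -b) in (east, north) terms
    aspect = math.degrees(math.atan2(-a, -b)) % 360.0
    return slope, aspect


if __name__ == "__main__":
    # Synthetic roof section rising 0.5 m per metre towards the east
    roof = [(x, y, 0.5 * x + 3.0) for x in range(5) for y in range(5)]
    print(slope_aspect(*fit_roof_plane(roof)))  # roughly (26.57, 270.0): faces west
```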

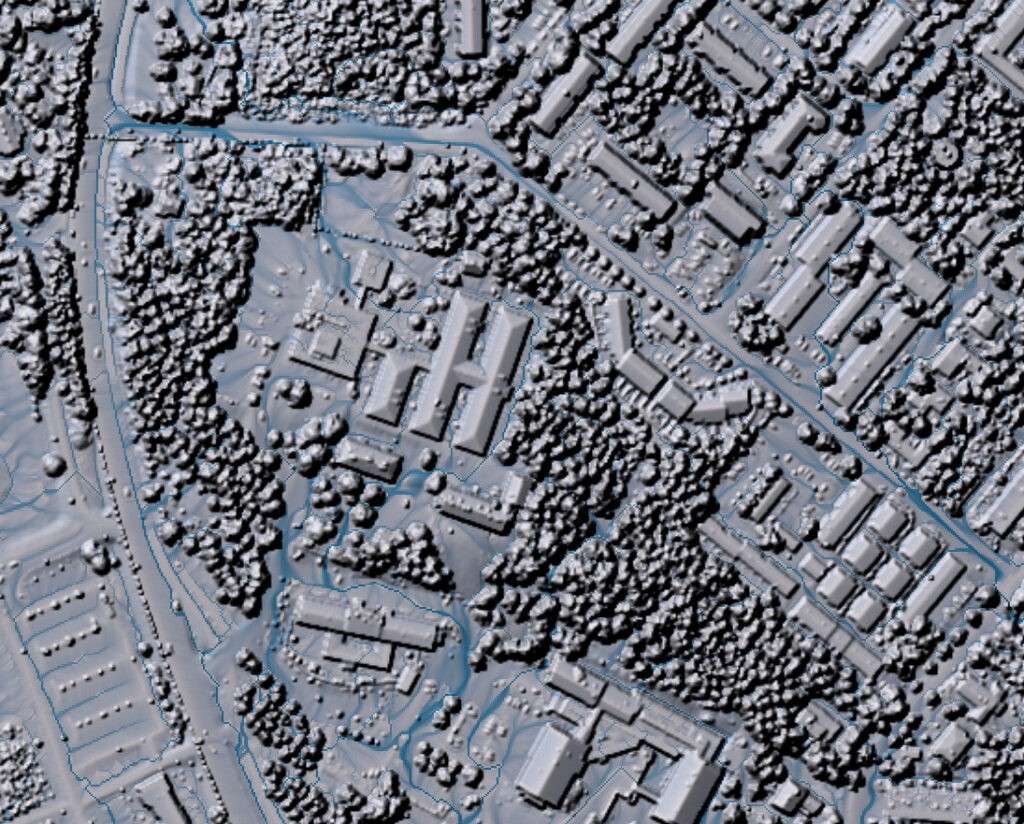

This is hugely useful, and again the tool worked very efficiently. Note that the Digital Surface Model (DSM) shown beneath the colored rooftops in the latter map was also created with WBT from the same point cloud dataset.
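A DSM is a raster of the highest surface (rooftops, tree canopy) rather than the bare ground. The simplest way to picture it is to bin the points into grid cells and keep the maximum elevation in each cell, as in the minimal sketch below. WBT’s Lidar gridding tools (for example LidarTINGridding) interpolate between points, so they also fill cells that contain no returns; the function name, cell size, and grid origin here are illustrative assumptions.

```python
import math
from typing import Iterable, List, Optional, Tuple


def max_z_dsm(points: Iterable[Tuple[float, float, float]],
              min_x: float, max_y: float, cell_size: float,
              ncols: int, nrows: int) -> List[List[Optional[float]]]:
    """Grid points into cells, keeping the highest z per cell (None = no points)."""
    grid: List[List[Optional[float]]] = [[None] * ncols for _ in range(nrows)]
    for x, y, z in points:
        col = math.floor((x - min_x) / cell_size)
        row = math.floor((max_y - y) / cell_size)  # row 0 is the northern edge
        if 0 <= row < nrows and 0 <= col < ncols:
            current = grid[row][col]
            if current is None or z > current:
                grid[row][col] = z
    return grid


if __name__ == "__main__":
    pts = [(0.5, 1.5, 10.0), (0.6, 1.4, 12.0), (1.5, 0.5, 3.0)]
    for row in max_z_dsm(pts, min_x=0.0, max_y=2.0, cell_size=1.0, ncols=2, nrows=2):
        print(row)
```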

Excluding Lidar classes

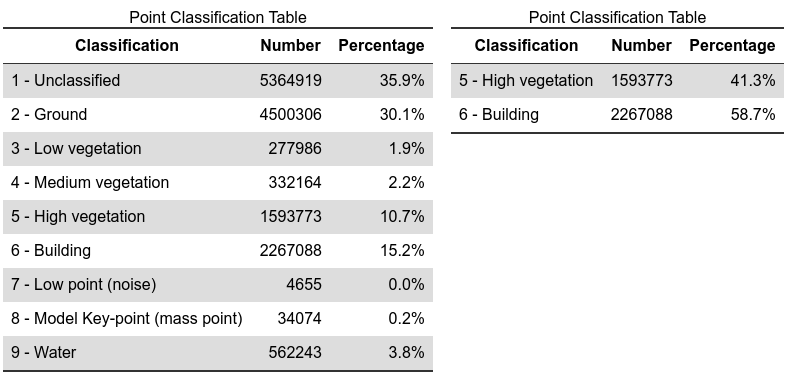

Remember that these datasets are huge by nature, so we should exclude the Lidar classes that hold no information of interest to us. We can do this with the FilterLidarClasses tool. For this exercise, we’ll use another dataset, from Turku, Finland. This recently published, high-density (30 points per m²!) Lidar data suits our purposes nicely. I excluded most of the classes and ended up with 35% of the original size of this Lidar tile (~15 million points).
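Under the hood, filtering by class is a simple predicate on each point’s classification code. The sketch below is illustrative only: it assumes points already loaded as (x, y, z, class) tuples and uses ASPRS LAS classification codes (e.g. 2 = ground, 3 = low vegetation, 11 = road surface in LAS 1.4). The Turku data may use a different scheme, so check its documentation before choosing codes. The retained share is measured here by point count, which only roughly tracks file size for compressed .laz files.

```python
from collections import Counter
from typing import Iterable, List, Set, Tuple

Point = Tuple[float, float, float, int]  # (x, y, z, classification code)

# Selected ASPRS LAS 1.4 classification codes, for readable summaries
ASPRS_NAMES = {1: "Unclassified", 2: "Ground", 3: "Low vegetation",
               4: "Medium vegetation", 5: "High vegetation", 6: "Building",
               7: "Low point (noise)", 9: "Water", 11: "Road surface",
               18: "High noise"}


def filter_classes(points: Iterable[Point], exclude: Set[int]) -> List[Point]:
    """Keep only points whose class code is not in `exclude`."""
    return [p for p in points if p[3] not in exclude]


def class_counts(points: Iterable[Point]) -> Counter:
    """Histogram of class codes."""
    return Counter(p[3] for p in points)


if __name__ == "__main__":
    raw = [(0, 0, 1, c) for c in (2, 2, 3, 6, 6, 5, 7, 1, 2, 11)]
    kept = filter_classes(raw, exclude={2, 3, 7, 11, 18})
    print({ASPRS_NAMES[c]: n for c, n in class_counts(kept).items()})
    print(f"retained {len(kept) / len(raw):.0%} of points")
```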

Result of excluding some Lidar classes (roads, ground, low vegetation…)

Another useful tool in WhiteboxTools, called LidarInfo, summarizes the contents of your Lidar datasets.
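Summaries like this start from the LAS public header, a fixed binary layout defined by the ASPRS LAS specification. The sketch below reads a few header fields with Python’s `struct` module, using the field offsets from the LAS 1.2 to 1.4 specifications. It is not how WBT works internally, and it ignores everything after the header (variable-length records and the points themselves). LAZ files keep the same uncompressed public header; the masking of the point-format byte below assumes that some LAZ writers set its high bits.

```python
import struct
import sys


def read_las_header(data: bytes) -> dict:
    """Parse selected fields of a LAS public header (offsets per the ASPRS spec)."""
    if data[:4] != b"LASF":
        raise ValueError("missing 'LASF' signature; not a LAS/LAZ file")
    major, minor = struct.unpack_from("<BB", data, 24)
    # Assumption: LAZ writers may set the high bits of this byte; mask them off
    point_format = data[104] & 0x3F
    (count,) = struct.unpack_from("<I", data, 107)  # legacy 32-bit point count
    if (major, minor) >= (1, 4) and len(data) >= 255:
        (count,) = struct.unpack_from("<Q", data, 247)  # LAS 1.4: 64-bit count
    scale = struct.unpack_from("<3d", data, 131)
    offset = struct.unpack_from("<3d", data, 155)
    max_x, min_x, max_y, min_y, max_z, min_z = struct.unpack_from("<6d", data, 179)
    return {
        "version": f"{major}.{minor}",
        "point_format": point_format,
        "point_count": count,
        "scale": scale,
        "offset": offset,
        "bounds": {"x": (min_x, max_x), "y": (min_y, max_y), "z": (min_z, max_z)},
    }


if __name__ == "__main__" and len(sys.argv) > 1:
    # Usage: python las_header.py tile.las  (375 bytes covers a LAS 1.4 header)
    with open(sys.argv[1], "rb") as f:
        print(read_las_header(f.read(375)))
```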

On the left, results for the raw data; on the right, results for the processed data:

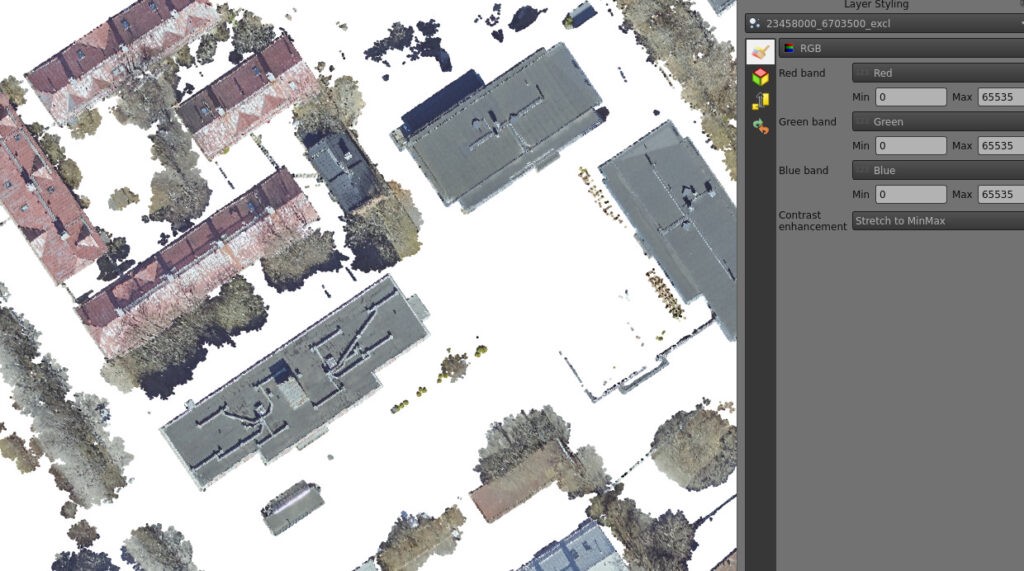

This is how we can keep only the classes we’re interested in. Once the filtering was done, I dropped the .laz file into QGIS, and it rendered directly with the RGB values included in the data. As we can see below, QGIS visualizes the RGB values quite nicely:
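Whether a file carries colour at all depends on its point data record format: in the ASPRS LAS specification, formats 2, 3, 5, 7, 8 and 10 include RGB fields. The specification also stores colour as 16-bit values, while displays use 8 bits per channel, so a viewer has to rescale them. The small sketch below shows both checks; the helper names are hypothetical, and because not every writer scales colours to the full 16-bit range, a fixed shift like this one may need to be replaced by per-channel min/max stretching.

```python
from typing import Tuple

# Point data record formats that include RGB fields (ASPRS LAS 1.2 to 1.4)
RGB_FORMATS = frozenset({2, 3, 5, 7, 8, 10})


def has_rgb(point_format: int) -> bool:
    """True if points in this record format carry Red/Green/Blue values."""
    return point_format in RGB_FORMATS


def rgb16_to_rgb8(r: int, g: int, b: int) -> Tuple[int, int, int]:
    """Map 16-bit LAS colour values to 8-bit display values by keeping the high byte."""
    return r >> 8, g >> 8, b >> 8


if __name__ == "__main__":
    print(has_rgb(3), rgb16_to_rgb8(65535, 32768, 0))
```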

The filtered data visualized in QGIS (see the RGB config in the QGIS styling panel)

From manual processes to automated batch processing

As noted above, these datasets are huge. Rather than processing and analyzing them in a desktop environment, you want to move them to the server side and batch process them in a meaningful way to save time and processing power. WhiteboxTools offers a broad and flexible set of scripting environments for automating your workflows:
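As a taste of what that automation can look like, the sketch below drives the WBT command-line executable from Python, running FilterLidarClasses on every tile in a folder. The `-r=`, `-v`, `-i=`, `-o=` and `--exclude_cls=` flags follow the WBT user manual as I understand it; confirm them with `whitebox_tools --toolhelp=FilterLidarClasses` for your version. The executable name, folder names, class codes and output naming are assumptions, and with `dry_run=True` the script only prints the commands.

```python
import subprocess
from pathlib import Path
from typing import Iterable, List


def filter_command(tile: Path, out_dir: Path, exclude: Iterable[int],
                   exe: str = "whitebox_tools") -> List[str]:
    """Build one FilterLidarClasses invocation as an argv list (no shell quoting needed)."""
    out = out_dir / f"{tile.stem}_filtered{tile.suffix}"
    return [exe, "-r=FilterLidarClasses", "-v",
            f"-i={tile}", f"-o={out}",
            f"--exclude_cls={','.join(str(c) for c in exclude)}"]


def batch_filter(tiles: Iterable[Path], out_dir: Path, exclude: Iterable[int],
                 dry_run: bool = True) -> List[List[str]]:
    """Filter every tile; print the commands instead of running them when dry_run."""
    exclude = list(exclude)
    cmds = [filter_command(t, out_dir, exclude) for t in tiles]
    if not dry_run:
        out_dir.mkdir(parents=True, exist_ok=True)
    for cmd in cmds:
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True raises CalledProcessError on a non-zero exit code
            subprocess.run(cmd, check=True)
    return cmds


if __name__ == "__main__":
    tiles = sorted(Path("tiles").glob("*.laz"))  # hypothetical input folder
    batch_filter(tiles, Path("filtered"), exclude=[2, 3, 7, 11, 18])
```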

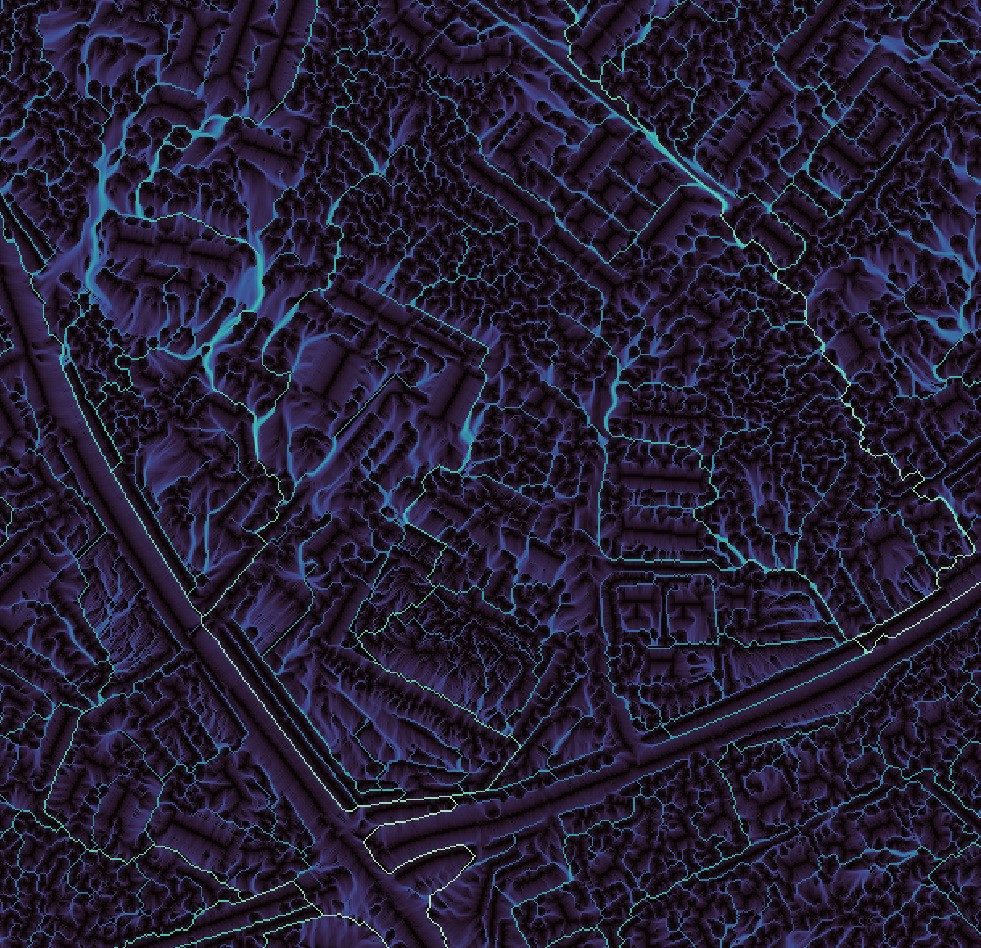

As an example, see the following results from a Python script that chains four WhiteboxTools processing tools and saves the user (me!) the trouble of running each step manually. And it worked great! This is how you get value out of Lidar data. You can explore the script on the WhiteboxTools website.

The flow accumulation analysis used the same point cloud dataset as its sole input.
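Between the point cloud and a flow accumulation map sit a few steps: gridding the ground points into a DEM, conditioning that DEM, and routing flow across it. The four tools in the script aren’t listed here, so the sketch below illustrates only the last step with the classic D8 method, in which each cell drains to its steepest downslope neighbour; WBT offers this as D8FlowAccumulation, among other flow algorithms. This is a toy, pure-Python version on a tiny grid: it skips depression filling or breaching (WBT has tools such as BreachDepressions for that), so pits simply act as sinks, and its counts include the cell itself.

```python
import math
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]
# The eight D8 neighbour offsets (row, col)
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, -1), (0, 1), (1, -1), (1, 0), (1, 1)]


def d8_receivers(dem: List[List[float]]) -> Dict[Cell, Optional[Cell]]:
    """For each cell, the neighbour with the steepest downward slope (None = sink)."""
    rows, cols = len(dem), len(dem[0])
    receivers: Dict[Cell, Optional[Cell]] = {}
    for r in range(rows):
        for c in range(cols):
            best: Optional[Cell] = None
            best_slope = 0.0
            for dr, dc in OFFSETS:
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols:
                    # Drop per unit distance; diagonal neighbours are sqrt(2) cells away
                    slope = (dem[r][c] - dem[nr][nc]) / math.hypot(dr, dc)
                    if slope > best_slope:
                        best, best_slope = (nr, nc), slope
            receivers[(r, c)] = best
    return receivers


def d8_flow_accumulation(dem: List[List[float]]) -> List[List[int]]:
    """Number of cells draining through each cell, counting the cell itself."""
    rows, cols = len(dem), len(dem[0])
    receivers = d8_receivers(dem)
    acc = [[1] * cols for _ in range(rows)]
    # Visit cells from highest to lowest so every upstream cell is handled first
    for r, c in sorted(receivers, key=lambda rc: dem[rc[0]][rc[1]], reverse=True):
        target = receivers[(r, c)]
        if target is not None:
            acc[target[0]][target[1]] += acc[r][c]
    return acc


if __name__ == "__main__":
    # A tiny tilted plane whose lowest corner is bottom-right
    dem = [[10.0 - r - c for c in range(3)] for r in range(3)]
    for row in d8_flow_accumulation(dem):
        print(row)
```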

Conclusions

WhiteboxTools is well worth your attention. It works quite seamlessly and is a great set of tools for analyzing point cloud data as well as many other types of data. It also works neatly with QGIS as a GIS environment for visualizing and analyzing Lidar alongside other data types.

As the amount of point cloud data increases, analytical opportunities grow for those willing to explore innovative ways of analyzing point clouds in a wider context with other geospatial data. Nowadays the data is acquired not just by planes, drones, and laser scanners but also by phones (check out Polycam and SiteScape). If you are an organization or an analyst interested in deriving insights from point cloud data, you should equip yourself with solid know-how and proper tooling.

I hope you’ve enjoyed this brief intro to the world of point cloud data, WhiteboxTools, and, once again, the almighty QGIS! If you’re interested in taking a deeper look at the opportunities for processing and analyzing point clouds, and you’re looking for a partner for the ride, consider reaching out (santtu@gispo.fi). Thank you for your interest!